Algorithmic trading in 2025 is less about a single “right” stack and more about picking coherent, well-fitted components across research, data, execution, and operations. Below is a pragmatic guide to the choices that matter—and how to combine them into a resilient, cost-aware pipeline that can actually ship and survive live markets.

1) Markets and Time Horizons: pick the battlefield first

Before tools, decide what and how often you trade; this drives every downstream choice.

- Asset classes:

  - FX & CFDs/indices: deep liquidity, 24/5, heavy broker differences.

  - Equities & ETFs: rich fundamentals and event data, U.S. market microstructure matters.

  - Futures: transparent costs, central limit order books, good for trend and seasonality.

  - Crypto: 24/7, fragmented venues, exchange risk, latency variance.

- Time horizon:

  - Low latency (sub-second to seconds): C++/Rust/C# favored; proximity hosting; FIX/native gateways.

  - Intraday minutes to hours: Python or C# with compiled hotspots; robust execution adapters; careful cost modeling.

  - Daily/weekly swing or stat-arb: Python first; cloud research; broader datasets.

Rule of thumb: if you can’t measure and control latency + slippage + fees precisely, avoid strategies that require it. Start at horizons where your operational edge is achievable.

2) Data strategy: accuracy beats volume

Your edge is only as real as your data hygiene.

- Vendors & sources: mix official exchange feeds or reputable aggregators for trades/quotes; supplement with fundamentals (filings), macro (central banks), and curated alt-data (news, sentiment, analytics).

- Granularity: use tick or L2 only if you truly exploit microstructure; otherwise consolidate to 1–5 min bars or daily to reduce noise.

- Cleaning: enforce timezone unification, survivorship-bias-free universes, corporate actions, session calendars, and duplicate/zero-volume filters.

- Validation: keep checksums and sample statistics for each ingestion batch; store metadata (vendor, version, timestamp) for auditability.
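A minimal stdlib sketch of three of the cleaning steps above (timezone unification to UTC, duplicate removal, zero-volume filtering), assuming each bar is a dict with a timezone-aware `ts`, a `close`, and a `volume`. The record layout and the name `clean_bars` are hypothetical. The example uses a fixed UTC-5 offset for brevity; real session logic should use IANA zones (for example via `zoneinfo`) because of daylight saving. Corporate actions and session calendars need vendor data and are left out.

```python
from datetime import datetime, timedelta, timezone


def clean_bars(bars):
    """Return bars with UTC timestamps, no duplicate instants, no zero-volume rows, sorted by time."""
    seen = set()
    cleaned = []
    for bar in bars:
        ts = bar["ts"]
        if ts.tzinfo is None:
            # Refuse naive timestamps instead of guessing which zone they came from.
            raise ValueError(f"naive timestamp: {ts!r}")
        ts_utc = ts.astimezone(timezone.utc)
        if bar["volume"] <= 0 or ts_utc in seen:
            continue  # zero-volume filler, or a second copy of an instant we already kept
        seen.add(ts_utc)
        cleaned.append({**bar, "ts": ts_utc})
    cleaned.sort(key=lambda b: b["ts"])
    return cleaned


# Example: one feed stamps bars in UTC-5, another in UTC; two records describe the same bar.
utc_minus_5 = timezone(timedelta(hours=-5))
raw = [
    {"ts": datetime(2025, 1, 2, 9, 31, tzinfo=utc_minus_5), "close": 101.0, "volume": 500},
    {"ts": datetime(2025, 1, 2, 14, 30, tzinfo=timezone.utc), "close": 100.0, "volume": 800},
    {"ts": datetime(2025, 1, 2, 9, 30, tzinfo=utc_minus_5), "close": 100.0, "volume": 800},
    {"ts": datetime(2025, 1, 2, 9, 32, tzinfo=utc_minus_5), "close": 101.5, "volume": 0},
]
bars = clean_bars(raw)  # two bars remain: 14:30 and 14:31 UTC
```

Rejecting naive timestamps outright is a deliberate choice: silently assuming a zone is one of the most common sources of look-ahead bugs.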

Cost control: for research, cache to Parquet on object storage; in live, maintain a rolling local cache to avoid vendor round-trips.
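For the validation step listed earlier, a per-batch audit record could look like the following sketch. It assumes rows are JSON-serializable dicts with a `close` field; the function names, the vendor string, and the 50% row-count drift threshold are hypothetical.

```python
import hashlib
import json
import statistics
from datetime import datetime, timezone


def batch_fingerprint(rows, vendor, version):
    """Audit record for one ingestion batch: content checksum, sample stats, provenance."""
    # Canonical JSON (sorted keys, fixed separators) so identical rows always hash identically.
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    closes = [row["close"] for row in rows]
    return {
        "sha256": hashlib.sha256(payload).hexdigest(),
        "rows": len(rows),
        "close_min": min(closes),
        "close_max": max(closes),
        "close_mean": statistics.fmean(closes),
        "vendor": vendor,
        "version": version,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }


def row_count_drifted(current, previous, max_change=0.5):
    """True if the row count moved by more than max_change (a fraction) vs the previous batch."""
    if previous["rows"] == 0:
        return current["rows"] > 0
    return abs(current["rows"] - previous["rows"]) / previous["rows"] > max_change


rows = [
    {"ts": "2025-01-02T14:30:00Z", "close": 100.0},
    {"ts": "2025-01-02T14:31:00Z", "close": 101.0},
]
record = batch_fingerprint(rows, vendor="example-vendor", version="v1")
```

Storing `record` next to the data means a later rerun can prove it read the same bytes, and a sudden jump in row count or price range gets flagged before it reaches a backtest.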

3) Research stack: language and libraries

- Python (the default): pandas/NumPy, backtesting.py, Backtrader, vectorbt, Zipline-Reloaded, QSTrader; numba for speedups; TA-Lib or pandas-ta for indicators; scikit-learn, XGBoost, PyTorch/TensorFlow for ML.

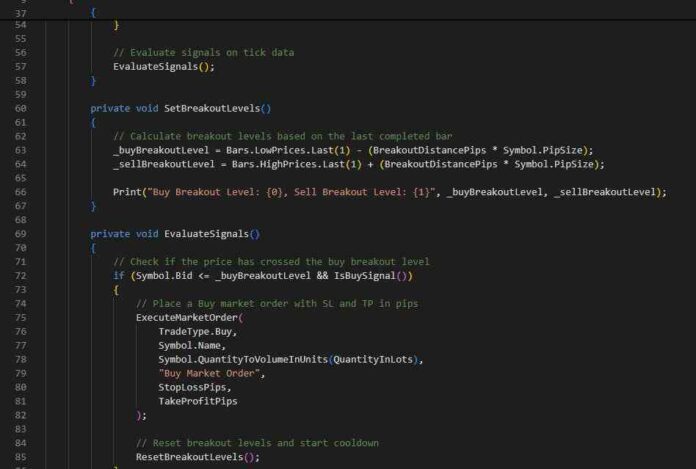

- C# (cTrader/cAlgo) and MQL5 (MetaTrader 5): tight integration with brokers for FX/CFDs; good for execution-adjacent logic; interop with Python via REST/WebSockets.

- C++/Rust: when you truly need microseconds or exchange gateways.

- Lean/QuantConnect (open source engine): one codebase for multi-asset backtests and paper/live (if you like that ecosystem).

- Crypto tooling: CCXT for exchange connectivity; freqtrade for retail-friendly crypto research/deployment.

Choice heuristic: prototype in Python; if the profiler says “hot loop,” move just that loop to numba/Cython or a microservice in C++/Rust—not the entire strategy.

4) Backtesting & simulation: realism over optimism

- Clock model: event-driven backtests for intraday; bar-based for daily.

- Costs: tiered commissions, spreads, slippage models (e.g., volume-based, volatility-scaled, or order-book-derived), exchange fees, borrow costs (for shorts).

- Bias controls: guard against look-ahead, survivorship, and multiple-testing bias. Use walk-forward splits, purged k-fold cross-validation, and data-snooping checks in the spirit of White’s Reality Check.

- Fill assumptions: simulate partial fills, queue position, market impact for larger orders.

- Out-of-sample discipline: keep a sealed test set and paper trade before going live.
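A minimal sketch of a per-trade cost model in basis points, combining commission, half the quoted spread, and a volatility-scaled square-root market-impact term. The square-root form is one common modeling assumption, not the only choice; `impact_coef` and the $0.005/share commission are placeholders to calibrate against your own fills, not any broker's schedule. Tiered commissions, exchange fees, and borrow costs are left out.

```python
import math


def trade_cost_bps(price, qty, spread, daily_vol, adv,
                   commission_per_share=0.005, impact_coef=0.1):
    """Estimated one-way cost of a trade, in basis points of notional.

    spread: quoted bid-ask spread in price units (crossing it costs half).
    daily_vol: daily return volatility as a fraction (0.02 = 2%).
    adv: average daily volume in shares.
    impact_coef: hypothetical scale of the square-root impact term.
    """
    notional = price * abs(qty)
    commission_bps = commission_per_share * abs(qty) / notional * 1e4
    half_spread_bps = (spread / 2) / price * 1e4
    participation = abs(qty) / adv
    impact_bps = impact_coef * daily_vol * math.sqrt(participation) * 1e4
    return commission_bps + half_spread_bps + impact_bps


def fill_price(mid, side, cost_bps):
    """Move a mid-price fill against the trader by the estimated cost (side: +1 buy, -1 sell)."""
    return mid * (1 + side * cost_bps / 1e4)


# 1,000 shares at $50 with a 2-cent spread, 2% daily vol, 1M shares ADV:
# about 1.0 bps commission + 2.0 bps half-spread + 0.63 bps impact.
cost = trade_cost_bps(price=50.0, qty=1_000, spread=0.02, daily_vol=0.02, adv=1_000_000)
buy_fill = fill_price(50.0, +1, cost)
```

Because impact grows with participation, the same signal gets more expensive as size increases, which is exactly what a flat "x bps per trade" assumption hides.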

Golden rule: a rough but honest backtest beats a slick, biased one. If your alpha only “works” under generous fills, it doesn’t work.
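The walk-forward splits mentioned under bias controls can be sketched as a generator of (train, test) index ranges with an embargo gap between them. This is a simplified gap, not the full purged k-fold procedure: it assumes labels look at most `embargo` bars ahead, so dropping those bars keeps test information out of training. All names and sizes are illustrative.

```python
def walk_forward_splits(n_obs, train_size, test_size, embargo=0):
    """Yield (train_range, test_range) pairs for rolling walk-forward evaluation.

    embargo: observations skipped between train and test so that labels built on
    forward-looking windows cannot leak from the test period into training.
    """
    start = 0
    while True:
        train_end = start + train_size
        test_start = train_end + embargo
        test_end = test_start + test_size
        if test_end > n_obs:
            break
        yield range(start, train_end), range(test_start, test_end)
        start += test_size  # roll forward so each test window is used exactly once


splits = list(walk_forward_splits(n_obs=20, train_size=8, test_size=4, embargo=2))
# Split 1: train 0-7, test 10-13. Split 2: train 4-11, test 14-17.
```

Test windows are contiguous and never overlap, so stitching their results together gives a single out-of-sample equity curve.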

5) Execution & brokerage: connections and routing

- APIs:

  - Retail multi-asset: Interactive Brokers (TWS/API), TradeStation, Alpaca (equities), Saxo APIs, and similar.

  - FX/CFDs: cTrader Open API, MetaTrader 5 gateway, broker REST/WebSockets; consider FIX for stability/latency.

  - Futures: direct exchange via FIX or through FCMs with proper market data entitlements.

  - Crypto: exchange REST/WebSockets; careful with rate limits, throttling, and downtime.

- Order types: marketable vs limit, pegged, post-only, IOC/FOK; conditional orders for risk.

- Smart routing & venues: U.S. equities: impact of lit vs dark, odd-lot rules, auction interactions; FX: LP selection, A-book/B-book broker behavior.

- Paper trading: always step through sim → paper → micro-live with tight telemetry.

Reliability tips: separate data and execution processes, implement reconnect logic, idempotent order handling, and replay-safe state machines.
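A minimal sketch of idempotent order handling and a replay-safe state machine. It assumes every order carries a client-chosen ID and every broker report carries a unique execution ID; most broker APIs and FIX expose equivalents, but the class, field names, and states here are hypothetical simplifications.

```python
from enum import Enum


class OrderState(Enum):
    NEW = "new"
    ACKED = "acked"
    PARTIALLY_FILLED = "partially_filled"
    FILLED = "filled"
    CANCELED = "canceled"
    REJECTED = "rejected"


# Allowed transitions; terminal states (FILLED, CANCELED, REJECTED) have none.
TRANSITIONS = {
    OrderState.NEW: {OrderState.ACKED, OrderState.REJECTED,
                     OrderState.PARTIALLY_FILLED, OrderState.FILLED},
    OrderState.ACKED: {OrderState.PARTIALLY_FILLED, OrderState.FILLED, OrderState.CANCELED},
    OrderState.PARTIALLY_FILLED: {OrderState.PARTIALLY_FILLED, OrderState.FILLED,
                                  OrderState.CANCELED},
}


class OrderTracker:
    """Tracks orders by client order ID so retries and replayed reports are harmless."""

    def __init__(self):
        self.state = {}        # client_order_id -> OrderState
        self.filled_qty = {}   # client_order_id -> cumulative filled quantity
        self.seen_exec_ids = set()

    def submit(self, client_order_id, send):
        """Call send() at most once per client_order_id, even if a reconnect triggers a retry."""
        if client_order_id in self.state:
            return False
        self.state[client_order_id] = OrderState.NEW
        self.filled_qty[client_order_id] = 0
        send(client_order_id)
        return True

    def on_report(self, exec_id, client_order_id, new_state, fill_qty=0):
        """Apply one execution report; duplicates and illegal transitions are ignored."""
        if exec_id in self.seen_exec_ids:
            return False
        current = self.state.get(client_order_id)
        if current is None or new_state not in TRANSITIONS.get(current, set()):
            return False
        self.seen_exec_ids.add(exec_id)
        self.state[client_order_id] = new_state
        self.filled_qty[client_order_id] += fill_qty
        return True


sent = []
tracker = OrderTracker()
tracker.submit("ord-1", sent.append)
tracker.submit("ord-1", sent.append)  # retry after a reconnect: nothing is re-sent
tracker.on_report("e1", "ord-1", OrderState.ACKED)
tracker.on_report("e2", "ord-1", OrderState.PARTIALLY_FILLED, fill_qty=40)
tracker.on_report("e2", "ord-1", OrderState.PARTIALLY_FILLED, fill_qty=40)  # replayed duplicate
tracker.on_report("e3", "ord-1", OrderState.FILLED, fill_qty=60)
```

After a reconnect, replaying the broker's execution reports through `on_report` rebuilds the same state without double-counting fills. A production version would also reconcile against the broker's open-order and position snapshots, since `send` can fail after the order is marked NEW.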

6) Infrastructure & deployment: from laptop to live

- Local → VPS/Cloud → Colo:

  - Local for research;

  - VPS/Cloud (Linode, AWS, GCP, Azure) for 24/7 bots;

  - Colocation only if your edge is latency-sensitive and profitable enough to justify rack costs.

- Containers & orchestration: Docker per strategy; supervisord or systemd for small shops; Kubernetes when you truly need horizontal scaling.

- Scheduling: cron/APScheduler for timed jobs; message queues (Redis, RabbitMQ, Kafka) for event pipelines.

- Observability: structured logging, Prometheus/Grafana or vendor APMs for metrics; log-based alerts (PagerDuty/Slack/Telegram).

- Secrets & keys: managed secret stores; rotate regularly; least-privilege access.

Disaster readiness: hot/warm standby bots, DB backups, kill-switch and position flatten routines, and a runbook you actually rehearse.
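A minimal sketch of a kill switch plus a position-flatten routine, assuming positions are kept as a symbol → signed quantity map. The order dict format and symbols are hypothetical; mapping them onto a real broker API is left to the execution adapter.

```python
import threading


class KillSwitch:
    """One-way flag shared by strategy threads: once tripped, no new risk is taken."""

    def __init__(self):
        self._tripped = threading.Event()
        self.reason = None

    def trip(self, reason):
        self.reason = reason
        self._tripped.set()

    def allows_new_orders(self):
        return not self._tripped.is_set()


def flatten_orders(positions):
    """Closing market orders for every non-zero position (symbol -> signed quantity)."""
    return [
        {"symbol": sym, "side": "SELL" if qty > 0 else "BUY", "qty": abs(qty), "type": "MARKET"}
        for sym, qty in sorted(positions.items())
        if qty != 0
    ]


switch = KillSwitch()
switch.trip("max daily loss breached")
positions = {"ES": 2, "EURUSD": -100_000, "NQ": 0}
orders = [] if switch.allows_new_orders() else flatten_orders(positions)
```

Market orders get out fastest but can be costly in thin books; some runbooks flatten with aggressive limits instead. The tripped state should also be persisted so a restarted process does not silently resume trading.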

7) Strategy design choices: rules, stats, or ML?

- Rule-based/technical: trends, breakouts, mean-reversion, seasonality; explainable, fast to implement.

- Stat-arb: pairs/cointegration, cross-sectional ranking, factor tilts; needs clean cross-sectional data and risk controls.

- Event-driven: earnings, macro prints, filings, analyst changes; requires fast data and tight execution windows.

- Machine learning: tree ensembles for tabular alpha, LSTM/Temporal CNN/Transformers for sequences, regime classifiers; use feature pipelines and strict OOS.

- Reinforcement learning: niche unless you control the full sim fidelity and costs; more ops burden than most expect.

Practical pattern: combine a simple base edge (e.g., volatility-scaled trend) with a learned overlay (regime filter or risk-off switch). Keep the core legible.
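A minimal sketch of that pattern: a volatility-scaled trend core whose only learned input is a boolean `risk_on` flag from a separate regime classifier (not shown). The lookback, 10% volatility target, 252 periods per year, and leverage cap are illustrative assumptions.

```python
import math
import statistics


def vol_scaled_trend(prices, lookback=20, target_vol=0.10, periods_per_year=252,
                     max_leverage=2.0, risk_on=True):
    """Signed position (fraction of capital) from a volatility-scaled trend rule.

    Core: sign of the lookback return, sized so annualized volatility is near target_vol.
    Overlay: risk_on comes from a separate regime model and can only switch the core off.
    """
    if len(prices) < lookback + 1:
        return 0.0
    window = prices[-(lookback + 1):]
    rets = [math.log(b / a) for a, b in zip(window, window[1:])]
    realized = statistics.stdev(rets) * math.sqrt(periods_per_year)
    change = window[-1] - window[0]
    direction = (change > 0) - (change < 0)  # +1 up, -1 down, 0 flat
    if realized == 0 or not risk_on:
        return 0.0
    return direction * min(target_vol / realized, max_leverage)


# Synthetic uptrend: log returns alternate +2% and 0%, roughly 16% annualized vol.
prices = [100.0]
for i in range(30):
    prices.append(prices[-1] * math.exp(0.02 if i % 2 == 0 else 0.0))
position = vol_scaled_trend(prices)                     # about 0.61 of capital, long
position_off = vol_scaled_trend(prices, risk_on=False)  # overlay says risk-off: 0.0
```

Restricting the overlay to a gate (rather than letting it resize or flip the position) keeps the core rule legible and makes the overlay's contribution easy to measure separately.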

8) Risk, sizing, and portfolio construction

- Position sizing: volatility targeting, ATR-based stops, fractional Kelly with caps; avoid all-in sizing, where one bad trade becomes a single point of failure.

- Constraints: sector/asset caps, max leverage, max holdings, max daily loss, and exposure limits.

- Correlation control: shrinkage/covariance models; risk parity and equal-risk contribution for baskets; beware unstable correlations in stress.

- TCA & slippage tracking: store arrival price, VWAP, implementation shortfall; feed this back to your backtest assumptions.

- Drawdown governance: soft and hard circuit breakers; auto-de-risk on breach; human sign-off to re-enable.
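Two of the sizing rules above can be sketched directly. Volatility targeting scales notional by target vol over asset vol; for continuous returns the Kelly leverage is the mean excess return divided by the return variance, which the sketch scales down (quarter Kelly) and caps. The fraction, cap, and example inputs are illustrative, not recommendations.

```python
def vol_target_notional(equity, target_vol, asset_vol, max_leverage=1.0):
    """Notional such that the position runs at roughly target_vol annualized volatility."""
    if asset_vol <= 0:
        raise ValueError("asset_vol must be positive")
    return equity * min(target_vol / asset_vol, max_leverage)


def fractional_kelly(mean_excess_return, variance, fraction=0.25, cap=0.5):
    """Kelly leverage mu / sigma^2 for continuous returns, scaled by fraction and capped."""
    if variance <= 0:
        raise ValueError("variance must be positive")
    full_kelly = mean_excess_return / variance
    return max(-cap, min(cap, fraction * full_kelly))


notional = vol_target_notional(100_000, target_vol=0.10, asset_vol=0.25)  # 40,000
kelly_small = fractional_kelly(0.05, 0.04)  # full Kelly 1.25 -> quarter Kelly 0.3125
kelly_big = fractional_kelly(0.20, 0.04)    # full Kelly 5.0 -> 1.25, capped at 0.5
```

The cap matters because the Kelly input `mean_excess_return` is the noisiest estimate in the whole pipeline; an overestimated edge translates linearly into overleverage.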

One-pager policy: write your risk rules as code and plain language; the bot enforces, you audit.
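As an example of a risk rule written as code, here is a minimal sketch of soft and hard drawdown circuit breakers measured from the equity high-water mark, with a hard breaker that latches until a named human re-enables it. The 5%/10% limits and the 0.5 soft-zone multiplier are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class DrawdownGovernor:
    """Soft and hard circuit breakers on drawdown from the equity high-water mark."""
    soft_limit: float = 0.05  # drawdown that halves risk
    hard_limit: float = 0.10  # drawdown that stops trading until a human re-enables
    soft_scale: float = 0.5   # risk multiplier while in the soft zone
    peak: float = 0.0
    halted: bool = False

    def update(self, equity):
        """Return the risk multiplier to apply to target positions after this equity mark."""
        self.peak = max(self.peak, equity)
        drawdown = 1.0 - equity / self.peak if self.peak > 0 else 0.0
        if drawdown >= self.hard_limit:
            self.halted = True  # latches: recovering equity alone does not re-enable
        if self.halted:
            return 0.0
        return self.soft_scale if drawdown >= self.soft_limit else 1.0

    def human_reenable(self, approved_by, equity):
        """Clear the hard breaker after sign-off and restart the high-water mark at equity."""
        if not approved_by:
            raise ValueError("re-enable requires a named approver")
        self.halted = False
        self.peak = equity
        return f"re-enabled by {approved_by}"


governor = DrawdownGovernor()
multipliers = [governor.update(e) for e in [100, 104, 98, 93, 97]]
# 98 is ~5.8% below the 104 peak (soft), 93 is ~10.6% below (hard, latched).
note = governor.human_reenable("risk-officer", equity=97)
after = governor.update(97)
```

Resetting the high-water mark on re-enable is a design choice: without it the governor would re-trip immediately, since the drawdown from the old peak is still beyond the hard limit.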

9) Ops, compliance, and auditability

- Version everything: code, data snapshots, parameter sets, model binaries.

- Reproducibility: container image hashes + dataset checksums + pinned random seeds = deterministic reruns.

- Human-in-the-loop: require approvals for big parameter changes; maintain an operations chat with bot summaries.

- Regulatory hygiene: retain logs, trade records, and market data usage rights; respect exchange/vendor licenses and data redistribution clauses.

SRE mindset: if something can fail, it will—design for graceful degradation, not heroics.

10) LLMs and automation in 2025: helpful, with guardrails

- Use cases that work: code scaffolding, doc parsing (e.g., filings), feature brainstorming, translating research notes, summarizing overnight news, drafting incident reports.

- What not to do: let an LLM tune live parameters without strict bounds and manual approval; never allow it to place orders directly.

- Guardrails: whitelists for functions, unit tests for generated code, and a sandbox where models can’t touch production keys.
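A minimal sketch of a function whitelist: the model proposes calls as plain dicts, and a dispatcher executes only registered tools, so order placement is unreachable by construction. The `{"name": ..., "args": {...}}` call shape is a hypothetical format, not any LLM vendor's tool-calling schema.

```python
ALLOWED_TOOLS = {}


def tool(fn):
    """Register a function the model may call; anything unregistered is refused."""
    ALLOWED_TOOLS[fn.__name__] = fn
    return fn


@tool
def summarize_fills(fills):
    return {"count": len(fills), "qty": sum(f["qty"] for f in fills)}


def place_order(symbol, qty):  # deliberately NOT registered: models never trade
    raise RuntimeError("must not be reachable from model output")


def dispatch(call):
    """Run a model-proposed call like {"name": ..., "args": {...}} only if whitelisted."""
    fn = ALLOWED_TOOLS.get(call.get("name"))
    if fn is None:
        return {"error": f"tool {call.get('name')!r} is not allowed"}
    try:
        return fn(**call.get("args", {}))
    except TypeError as exc:  # wrong or missing arguments from the model
        return {"error": f"bad arguments: {exc}"}


ok = dispatch({"name": "summarize_fills", "args": {"fills": [{"qty": 5}, {"qty": 7}]}})
blocked = dispatch({"name": "place_order", "args": {"symbol": "ES", "qty": 1}})
```

Refusals come back as data rather than exceptions, so they can be logged and shown to the model without crashing the pipeline.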

Treat LLMs as junior research assistants—fast, tireless, occasionally wrong. Your job is to verify.

11) Three archetype “starter kits”

A) Daily cross-asset swing trader

- Market: equity ETFs + liquid futures (ES, NQ) or FX majors.

- Data: daily bars + fundamentals; reliable split/dividend handling.

- Stack: Python, pandas, backtesting.py/vectorbt; TA-Lib for signals; scikit-learn for regime filter.

- Execution: broker API with day-end and next-open logic; simple limit/market orders.

- Infra: VPS with Docker; cron once/day; Prometheus alerting.

- Risk: vol-targeted unit sizing; 2–3% daily loss cap; portfolio max 10–20 positions.

B) Intraday FX/indices mean-reversion

- Market: FX majors, GER40/US500 CFDs.

- Data: 1-minute bars with reliable spread series.

- Stack: Python research + cTrader/MT5 for live execution; numba-accelerated indicator calcs.

- Execution: limit-first with IOC fallbacks; spread filters; session/time-of-day constraints.

- Infra: low-cost VPS near broker; persistent WebSocket; auto-reconnect.

- Risk: ATR stops; hard daily stop; kill-switch on slippage spike.
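For kit B's kill-switch on slippage spikes, one way to define a "spike" is a fill whose implementation shortfall versus arrival price exceeds a multiple of its own rolling median. The sketch below assumes that rule; the window, minimum history, 3× multiplier, and 1 bp floor are hypothetical thresholds to tune per instrument.

```python
import statistics
from collections import deque


def shortfall_bps(side, arrival_price, fill_price):
    """Implementation shortfall of one fill vs the arrival price, in bps (positive = cost)."""
    sign = 1 if side == "BUY" else -1
    return sign * (fill_price - arrival_price) / arrival_price * 1e4


class SlippageMonitor:
    """Flags fills whose slippage runs far above the recent rolling median."""

    def __init__(self, window=50, min_obs=20, spike_mult=3.0, floor_bps=1.0):
        self.history = deque(maxlen=window)
        self.min_obs = min_obs
        self.spike_mult = spike_mult
        self.floor_bps = floor_bps  # keeps a near-zero median from flagging every fill

    def record(self, bps):
        """Add one fill's slippage; return True if it looks like a spike."""
        spike = False
        if len(self.history) >= self.min_obs:
            baseline = max(statistics.median(self.history), self.floor_bps)
            spike = bps > self.spike_mult * baseline
        self.history.append(bps)
        return spike


buy_cost = shortfall_bps("BUY", 100.0, 100.05)   # about 5 bps paid
sell_cost = shortfall_bps("SELL", 100.0, 99.98)  # about 2 bps paid
monitor = SlippageMonitor(min_obs=5)
flags = [monitor.record(x) for x in [1.5, 2.0, 1.0, 1.8, 1.2, 2.1, 9.0]]
```

A `True` from `record` would be wired to the kill switch; using a rolling median rather than a fixed threshold lets the rule adapt between quiet and busy sessions.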

C) Stat-arb equity basket (hourly)

- Market: U.S. top 1,000 by liquidity.

- Data: survivorship-free universe; point-in-time fundamentals; hourly bars.

- Stack: Python with feature store (Parquet + metadata), XGBoost for ranking, orthogonalization vs market/sector.

- Execution: VWAP-style slicing; child orders; avoid open/close crowding.

- Infra: cloud research + dedicated VPS for live; message queue for signals → executor.

- Risk: dollar-neutral target; beta and sector caps; dynamic leverage ceilings.
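For kit C's dollar-neutral target, a minimal sketch that turns cross-sectional scores (for example, model ranking outputs) into weights summing to zero with fixed gross exposure. Rank-transforming first is an assumption chosen to tame outliers; ties are broken by sort order. Beta and sector caps are not enforced here and would need an orthogonalization step or a constrained optimizer.

```python
def dollar_neutral_weights(scores, gross=1.0):
    """Map {symbol: score} to weights with sum(w) == 0 and sum(|w|) == gross."""
    if len(scores) < 2:
        raise ValueError("need at least two names")
    ordered = sorted(scores, key=scores.get)  # lowest score first
    mean_rank = (len(ordered) - 1) / 2
    # Demeaned ranks: bottom half negative (short), top half positive (long).
    raw = {sym: rank - mean_rank for rank, sym in enumerate(ordered)}
    scale = sum(abs(v) for v in raw.values())
    return {sym: gross * v / scale for sym, v in raw.items()}


weights = dollar_neutral_weights({"AAA": 0.9, "BBB": -0.3, "CCC": 0.1, "DDD": -1.2})
# AAA +0.375, CCC +0.125, BBB -0.125, DDD -0.375
```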

12) A lean, durable workflow

- Hypothesis → minimal prototype (two weeks max).

- Backtest with brutal realism (costs, rejects, delays).

- Paper trade with full telemetry (two to four weeks).

- Micro-live with minimal capital, hard stops, and daily reviews.

- Refine or retire quickly; keep a graveyard of retired ideas and lessons learned.

13) Common traps to avoid

- Optimizing to the backtest UI instead of the market.

- Ignoring operational risk (one flaky VPS can erase months of Sharpe).

- Over-complex feature stacks when a two-rule strategy would do.

- Treating slippage as a rounding error.

- Scaling too early; scale reliability first.