Origins, Pitfalls, Consequences, and Alternatives

In the pantheon of financial risk management tools, few concepts have been as influential—or as controversial—as Value at Risk (VaR). Once hailed as a breakthrough in measuring market risk, VaR has since been blamed for lulling banks and regulators into a false sense of security, contributing to crises from the collapse of Long-Term Capital Management (LTCM) in 1998 to the global financial meltdown of 2008.

The story of VaR is not merely about a statistical measure. It is a cautionary tale about the limits of models, the danger of misplaced confidence, and the eternal tension between quantitative precision and real-world complexity.

Origins of VaR: From JPMorgan to Basel

The seeds of VaR were sown in the early 1990s, when financial institutions were grappling with increasingly complex portfolios. Globalization, derivatives innovation, and the sheer scale of trading demanded a consistent framework for measuring exposure.

JPMorgan played the central role. In 1994, the bank launched its RiskMetrics system, which provided a standardized approach to calculate market risk using historical data and statistical assumptions. At its core, VaR asked a seemingly simple question:

“What is the maximum loss a portfolio could experience over a given time horizon, at a certain confidence level?”

For example, a daily VaR of $10 million at 99% confidence means that on 99 out of 100 trading days, losses are expected to stay at or below $10 million. On the remaining day the loss can be larger, and VaR does not say by how much.
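To make the definition concrete, here is a minimal sketch of VaR read directly off a history of daily profit and loss. The function name `historical_var`, the quantile convention, and the P&L figures are illustrative assumptions, not part of RiskMetrics or any specific bank's method.

```python
import math

def historical_var(pnl, confidence=0.99):
    """Empirical VaR from daily P&L (losses are negative numbers).

    Returns the loss, as a positive number, that was not exceeded
    on a `confidence` share of the observed days.
    """
    losses = sorted(-x for x in pnl)  # losses as positive numbers, ascending
    # Simple "ceil" quantile convention; round() guards against float noise.
    k = math.ceil(round(confidence * len(losses), 9)) - 1
    return losses[k]

# Hypothetical 100-day history in $ millions: 96 quiet days and 4 bad ones.
pnl = [1.0] * 96 + [-2.0, -4.0, -10.0, -60.0]

var_99 = historical_var(pnl, 0.99)
days_beyond_var = sum(1 for x in pnl if -x > var_99)
print(var_99, days_beyond_var)
```

In this made-up history the 99% VaR is $10 million, and the one day that breaches it is a $60 million loss, a figure the VaR number itself never reveals.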

Regulators quickly embraced VaR. The Basel Committee on Banking Supervision incorporated it into the 1996 Market Risk Amendment to the Basel Accord and carried it forward into Basel II, allowing banks to set market-risk capital requirements based on their internal VaR models. What began as a risk management tool soon became the industry standard, a lingua franca of modern finance.

Why VaR Took Off

VaR’s popularity stemmed from several appealing features:

- Simplicity – It condensed complex risk exposures into a single, digestible number.

- Comparability – VaR allowed managers, boards, and regulators to compare risk across portfolios, desks, and institutions.

- Integration – It provided a framework that could be applied to multiple asset classes, from equities to derivatives.

- Regulatory blessing – Once Basel codified it, VaR became institutionalized, ensuring its widespread adoption.

In a world where complexity was spiraling, VaR seemed like a beacon of clarity. But beneath its elegance lay structural weaknesses.

The Pitfalls of Value at Risk

1. False Sense of Security

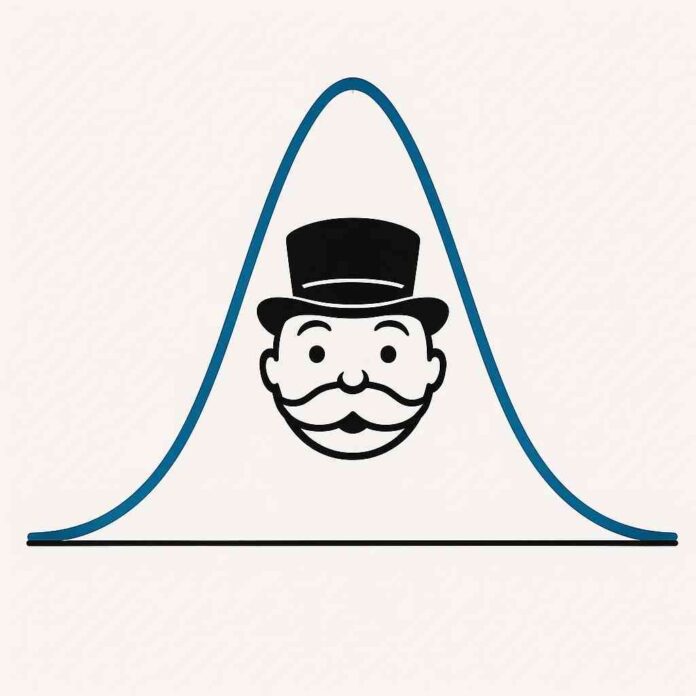

Perhaps the greatest flaw of VaR is its illusion of precision. A single number, backed by mathematical models, conveys confidence. But that number hides enormous assumptions: normal distributions, stable correlations, and historical continuity. In reality, markets are non-linear, correlations spike in crises, and rare events happen more often than models predict.

2. Ignoring the Tail

By design, VaR only measures risk up to a given confidence level (e.g., 95% or 99%). It says nothing about what happens in the worst 1% or 5% of scenarios. This omission is fatal: it is precisely in that tail where catastrophic losses occur. LTCM, for instance, failed in large part because its models gave too little weight to extreme outcomes.

3. Backward-Looking Nature

VaR relies heavily on historical data. But financial markets evolve; past correlations and volatilities often break down under stress. Before 2007, mortgage-backed securities appeared low-risk based on history. VaR models embedded that complacency, blinding institutions to the systemic risks building up.

4. Model Risk

Different methodologies yield different VaR figures:

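- Historical simulation – replays past returns against today's portfolio and reads VaR off the resulting loss distribution.

- Variance-covariance – assumes normally distributed returns and linear exposures, so VaR follows from the portfolio's mean and standard deviation.

- Monte Carlo simulation – draws many random scenarios from an assumed model of the risk factors and reads VaR off the simulated losses.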

Each method carries its own assumptions and weaknesses. A portfolio’s VaR can vary dramatically depending on the chosen approach.
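The three approaches can be sketched side by side on the same return series. Everything here is an illustrative assumption: the function names, the synthetic fat-tailed returns, and the choice of a normal model for the Monte Carlo step (real implementations often simulate richer models).

```python
import math
import random
from statistics import NormalDist, mean, stdev

def quantile_loss(returns, confidence):
    """Loss (positive number) at the given confidence level of the sample."""
    losses = sorted(-r for r in returns)
    k = math.ceil(round(confidence * len(losses), 9)) - 1
    return losses[k]

def historical_simulation_var(returns, confidence=0.99):
    # Uses the observed returns as they are, with no distributional assumption.
    return quantile_loss(returns, confidence)

def variance_covariance_var(returns, confidence=0.99):
    # Normal assumption: VaR = z * sigma - mu.
    z = NormalDist().inv_cdf(confidence)
    return z * stdev(returns) - mean(returns)

def monte_carlo_var(returns, confidence=0.99, n_paths=50_000, seed=0):
    # Simulates returns from a normal model fitted to the data (an assumption).
    rng = random.Random(seed)
    mu, sigma = mean(returns), stdev(returns)
    simulated = [rng.gauss(mu, sigma) for _ in range(n_paths)]
    return quantile_loss(simulated, confidence)

# Synthetic daily returns: mostly calm days, occasionally a turbulent one.
rng = random.Random(42)
returns = [rng.gauss(0.0, 0.04 if rng.random() < 0.05 else 0.01)
           for _ in range(2000)]

hs = historical_simulation_var(returns)
vc = variance_covariance_var(returns)
mc = monte_carlo_var(returns)
print(f"historical={hs:.4f}  variance-covariance={vc:.4f}  monte-carlo={mc:.4f}")
```

Because the Monte Carlo step here shares the normal assumption, it lands close to the variance-covariance figure. Any gap between those two and the historical number reflects how far the data depart from normality.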

5. Incentive Distortions

Once regulators accepted VaR as the standard, banks had incentives to optimize around it. They engineered portfolios that looked safe under VaR but harbored hidden risks in the tails. In essence, VaR became a box-ticking exercise, exploited by sophisticated traders who understood its blind spots.

Consequences: From LTCM to the Great Crisis

Long-Term Capital Management (1998)

LTCM, whose partners included two Nobel laureates and several star traders, used highly leveraged arbitrage strategies. Its models suggested risks were minuscule, often within the comfort zone of VaR. But when Russia defaulted in 1998, correlations spiked, spreads blew out, and LTCM’s losses dwarfed anything VaR predicted. The Federal Reserve Bank of New York had to coordinate a $3.6 billion rescue funded by a consortium of private banks.

Global Financial Crisis (2007–2008)

VaR played an even larger role in the subprime crisis. Banks reported seemingly safe VaR numbers while loading up on mortgage-backed securities and credit derivatives. The models assumed housing markets across the U.S. were uncorrelated and that extreme price moves were improbable. When reality diverged, the tail losses were catastrophic.

The irony was stark: the very tool meant to protect the system gave institutions and regulators a false sense of control. VaR didn’t cause the crisis, but it amplified complacency.

Critiques from Within

Several prominent voices criticized VaR long before 2008:

- Nassim Nicholas Taleb, author of The Black Swan, argued that VaR ignores fat tails and underestimates rare events.

- Philippe Jorion, an early advocate, acknowledged that VaR is a tool, not a guarantee, and must be supplemented with stress testing.

- Even JPMorgan insiders admitted that VaR, when misused, can conceal more than it reveals.

The consensus emerged: VaR is not useless, but dangerous when treated as a comprehensive measure of risk.

Alternatives and Improvements

The post-crisis era has seen a push for better risk metrics. Several approaches aim to address VaR’s shortcomings:

1. Conditional Value at Risk (CVaR)

Also called Expected Shortfall, CVaR measures the average loss in the worst-case scenarios beyond the VaR threshold. Unlike VaR, it captures the tail and provides insight into how bad things can get. Under the Basel Committee's Fundamental Review of the Trading Book, part of the Basel III reforms, the internal models approach for market risk replaces 99% VaR with Expected Shortfall at a 97.5% confidence level.
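The difference between the two measures is easiest to see on two books that share a VaR but not a tail. The sketch below is illustrative only: `var_and_es`, the quantile convention, and both loss histories are assumptions made up for the example.

```python
import math

def var_and_es(losses, confidence=0.95):
    """Return (VaR, Expected Shortfall) for losses given as positive numbers.

    ES is taken as the mean of the observations beyond the VaR quantile,
    i.e. the worst (1 - confidence) share of the sample.
    """
    ordered = sorted(losses)
    n = len(ordered)
    cutoff = math.ceil(round(confidence * n, 9))  # number of non-tail observations
    if cutoff >= n:
        raise ValueError("sample too small for this confidence level")
    var = ordered[cutoff - 1]
    tail = ordered[cutoff:]  # losses worse than the VaR quantile
    return var, sum(tail) / len(tail)

# Two hypothetical 100-day loss histories in $ millions.
book_a = [0.0] * 94 + [10.0] + [11.0, 12.0, 13.0, 14.0, 15.0]
book_b = [0.0] * 94 + [10.0] + [20.0, 40.0, 60.0, 80.0, 100.0]

var_a, es_a = var_and_es(book_a)
var_b, es_b = var_and_es(book_b)
print(var_a, es_a)
print(var_b, es_b)
```

Both books report a 95% VaR of $10 million, yet the average loss in the worst 5% of days is $13 million for the first and $60 million for the second.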

2. Stress Testing and Scenario Analysis

Rather than rely solely on historical data, stress tests simulate extreme but plausible scenarios—such as a sudden interest rate spike, geopolitical conflict, or liquidity freeze. This approach acknowledges that crises often defy statistical norms.
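A stress test can be sketched as a set of named scenarios applied to current positions. The portfolio, the scenario names, and the shock sizes below are hypothetical, and the linear revaluation is a simplifying assumption; production stress tests typically reprice each instrument in full.

```python
# Hypothetical portfolio: market value in $ millions per risk bucket.
positions = {"equities": 120.0, "gov_bonds": 200.0, "credit": 80.0}

# Hypothetical scenarios: assumed fractional change in value per bucket.
scenarios = {
    "rate_spike": {"equities": -0.05, "gov_bonds": -0.08, "credit": -0.06},
    "liquidity_freeze": {"equities": -0.15, "gov_bonds": 0.02, "credit": -0.25},
}

def scenario_pnl(positions, shocks):
    """Linear P&L of the portfolio under one scenario (buckets without a shock are unchanged)."""
    return sum(value * shocks.get(name, 0.0) for name, value in positions.items())

results = {name: scenario_pnl(positions, shocks) for name, shocks in scenarios.items()}
worst_scenario = min(results, key=results.get)

for name, pnl in results.items():
    print(f"{name}: {pnl:+.1f}")
print("worst:", worst_scenario)
```

Unlike VaR, nothing here depends on how likely each scenario is. The output is a loss conditional on the scenario happening, which is why the choice of scenarios matters more than the arithmetic.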

3. Extreme Value Theory (EVT)

Borrowed from statistics, EVT explicitly models the tails of distributions, focusing on rare, catastrophic events. While technically demanding, it provides a better framework for understanding risks that conventional VaR ignores.
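One of the simplest EVT tools is the Hill estimator, which gauges how heavy a tail is from the largest observations alone. The sketch below is an illustration under assumptions: the function name, the choice of `k`, and the synthetic Pareto losses are all made up for the example, and practical EVT work more often fits a generalized Pareto distribution to exceedances over a threshold.

```python
import math
import random

def hill_tail_index(losses, k):
    """Hill estimate of the tail index alpha from the k largest losses.

    A smaller alpha means a heavier tail, i.e. extreme losses are more likely.
    """
    ordered = sorted(losses, reverse=True)
    if not 0 < k < len(ordered):
        raise ValueError("k must be between 1 and len(losses) - 1")
    threshold = ordered[k]  # the (k+1)-th largest loss
    if threshold <= 0:
        raise ValueError("threshold loss must be positive")
    mean_log_excess = sum(math.log(x / threshold) for x in ordered[:k]) / k
    return 1.0 / mean_log_excess

# Synthetic losses drawn from a Pareto distribution with tail index 3.
rng = random.Random(7)
sample = [rng.paretovariate(3.0) for _ in range(20_000)]

alpha_hat = hill_tail_index(sample, k=500)
print(round(alpha_hat, 2))
```

The estimate should land near the true index of 3 for this sample. On real data the result is sensitive to `k`, which is part of why the approach is technically demanding.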

4. Liquidity-Adjusted Risk Measures

Traditional VaR assumes positions can be liquidated instantly. In reality, market liquidity evaporates in crises. Liquidity-adjusted VaR incorporates the cost and time of unwinding positions, producing a more realistic picture of potential losses.
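As a rough sketch of the idea, one simple formulation stretches the VaR horizon to the time needed to unwind and adds half the bid-ask spread as an exit cost. The function, its parameters, and the numbers are assumptions for illustration; the square-root-of-time scaling also assumes independent daily returns, which tends to fail in exactly the stressed markets the measure targets.

```python
import math

def liquidity_adjusted_var(one_day_var, position_value, bid_ask_spread, days_to_liquidate):
    """Illustrative liquidity-adjusted VaR in the same units as one_day_var.

    one_day_var       -- conventional one-day VaR
    position_value    -- market value of the position
    bid_ask_spread    -- relative spread, e.g. 0.02 for 2%
    days_to_liquidate -- assumed time needed to unwind the position
    """
    # Time component: scale VaR to the liquidation horizon (sqrt-of-time rule).
    horizon_var = one_day_var * math.sqrt(days_to_liquidate)
    # Cost component: selling at the bid costs about half the spread.
    spread_cost = 0.5 * bid_ask_spread * position_value
    return horizon_var + spread_cost

# Hypothetical position: $500m, $10m one-day VaR, 2% spread, 4 days to exit.
lvar = liquidity_adjusted_var(10.0, 500.0, 0.02, 4)
print(lvar)
```

With these made-up inputs the adjusted figure is $25 million, two and a half times the conventional one-day VaR.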

5. Integrated Risk Management

Modern frameworks increasingly emphasize combining multiple metrics: VaR, CVaR, stress tests, and qualitative assessments. No single number can capture the complexity of risk.

The Future of Risk Measurement

The debate over VaR is not just technical—it’s philosophical. Can risk truly be quantified in a single number? Or is risk management ultimately about humility, recognizing the limits of models and preparing for the unknown?

Financial institutions today still calculate VaR, but it is now one tool among many. Regulators, chastened by 2008, insist on broader measures. Investors demand transparency about assumptions and tail risks. The cultural shift is toward resilience, not just measurement.

Conclusion

Value at Risk was born from a noble ambition: to tame the chaos of markets with mathematics. Its elegance and simplicity made it irresistible to bankers, regulators, and boards. But in its precision lay its peril. By ignoring the extremes, by relying on yesterday’s data, and by fostering complacency, VaR left the financial system exposed when crises struck.

The lesson is not that models are useless, but that models are maps, not territories. VaR can help navigate, but it cannot capture every storm. True risk management requires imagination, skepticism, and preparation for the improbable.

As Jim Simons once said about models: “They work… until they don’t.” The same is true of VaR. Its legacy is a reminder that the greatest risk often lies not in what the numbers tell us, but in what they leave unsaid.